About

I received my B.S. degree from East China University of Science and Technology (ECUST) in 2007 and my Ph.D. degree from ECUST in 2012, supervised by Prof. Jiajun Lin. My research interests include saliency detection, camouflaged object detection, multi-modal image segmentation, and computer vision more broadly.

Selected Papers

2025

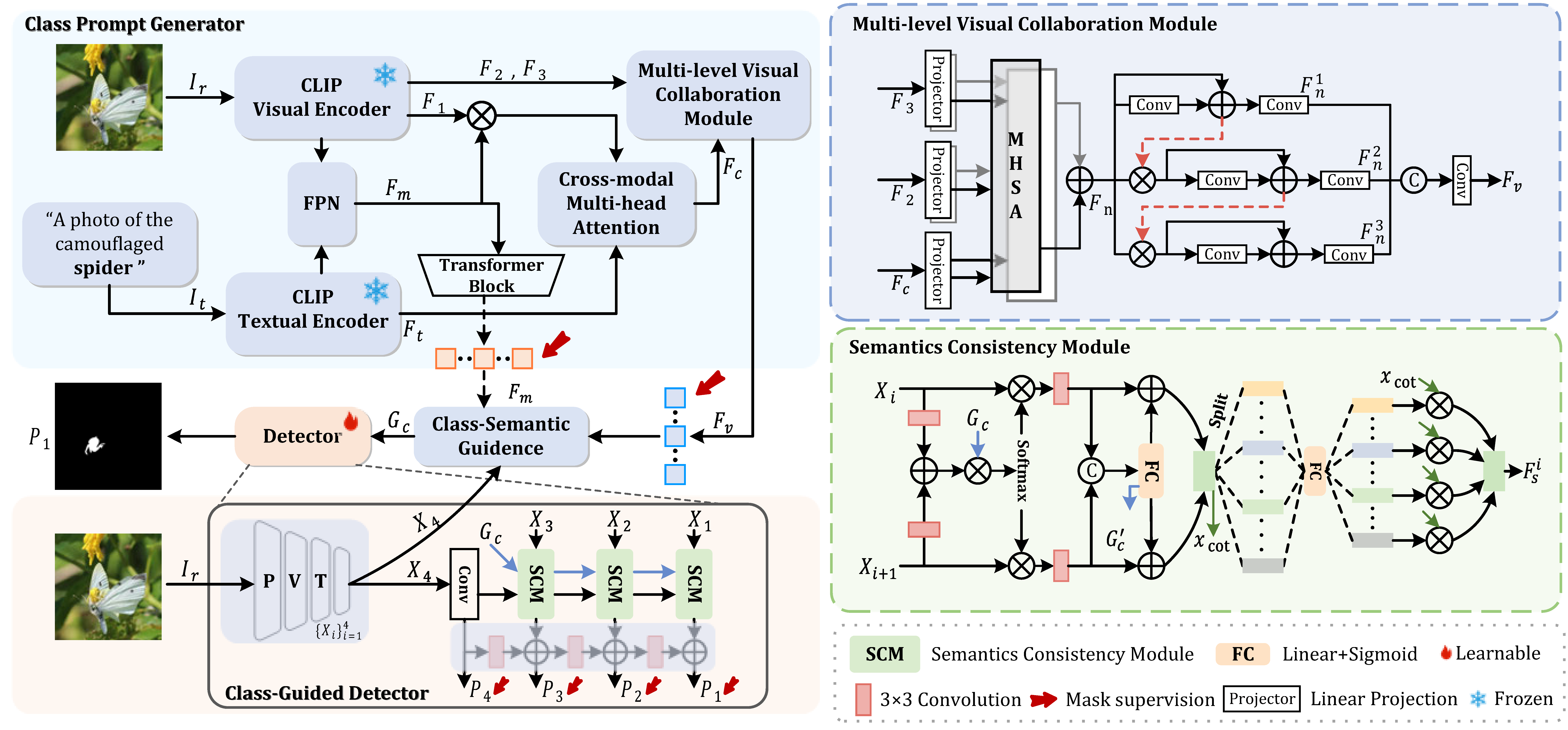

CGCOD: Class-Guided Camouflaged Object Detection

Chenxi Zhang, Qing Zhang*, Jiayun Wu, Youwei Pang*

ACM International Conference on Multimedia (ACM MM), 2025

[ CCF-A ]

[Code]

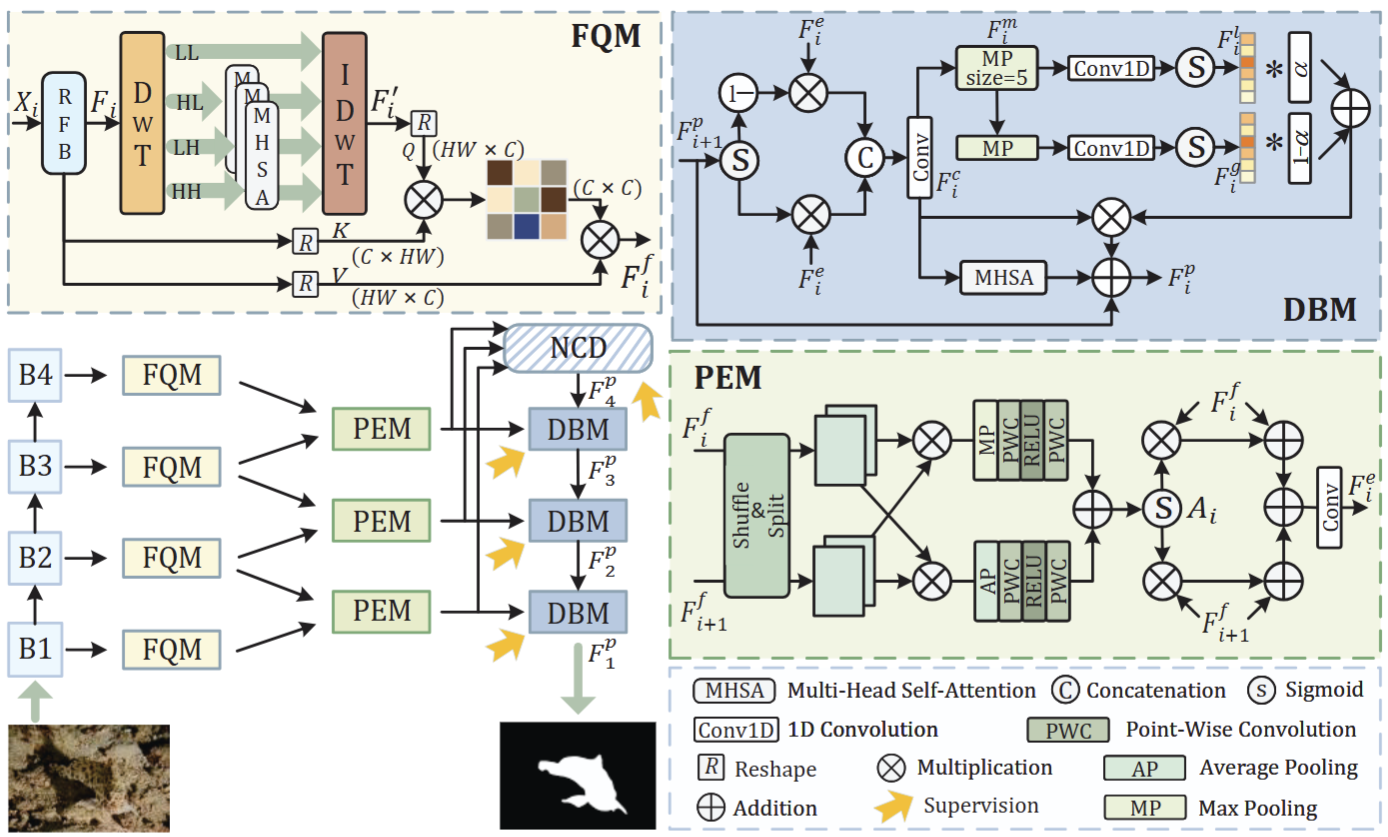

Frequency-guided Camouflaged Object Detection with Perceptual Enhancement and Dynamic Balance

Yuetong Li, Yilin Zhao, Qing Zhang*, Qiangqiang Zhou, Yanjiao Shi

IEEE International Conference on Multimedia and Expo (ICME), 2025

[ CCF-B ]

[Code]

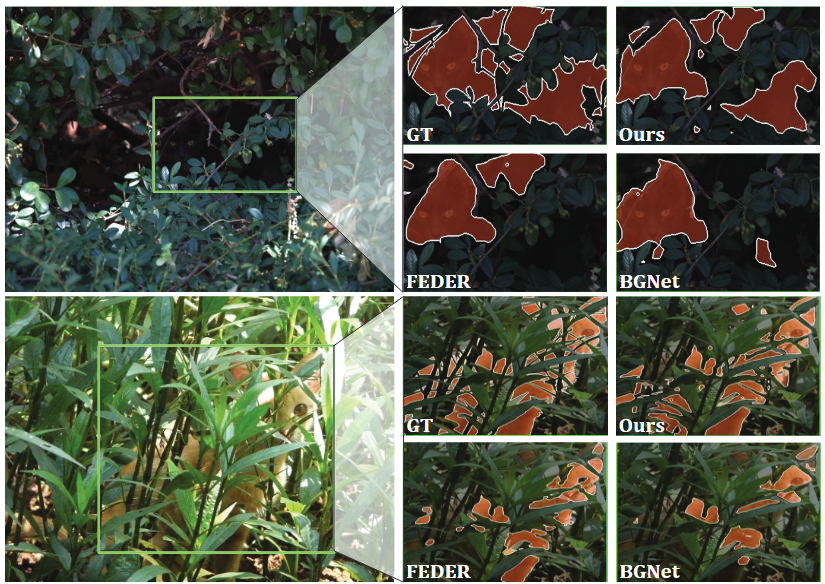

Rethinking Camouflaged Object Detection via Foreground-background Interactive Learning

Chenxi Zhang, Qing Zhang*, Jiayun Wu

International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2025

[ CCF-B ]

[Code] [PDF]

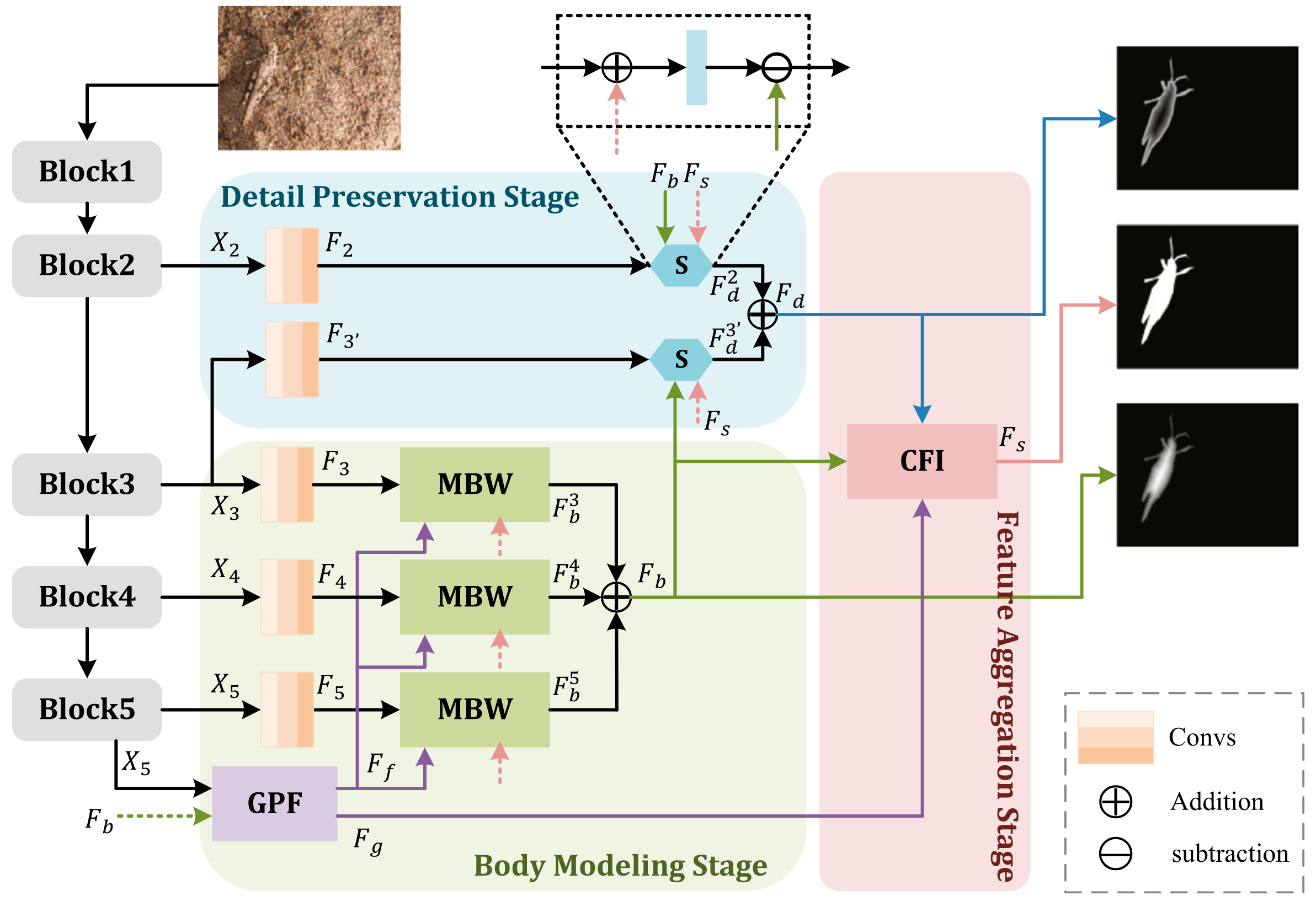

Bilateral Decoupling Complementarity Learning Network for Camouflaged Object Detection

Rui Zhao, Yuetong Li, Qing Zhang*, Xinyi Zhao

Knowledge-Based Systems (KBS), 2025, 314: 113158

[ CAS Zone 1, Top ]

[PDF]

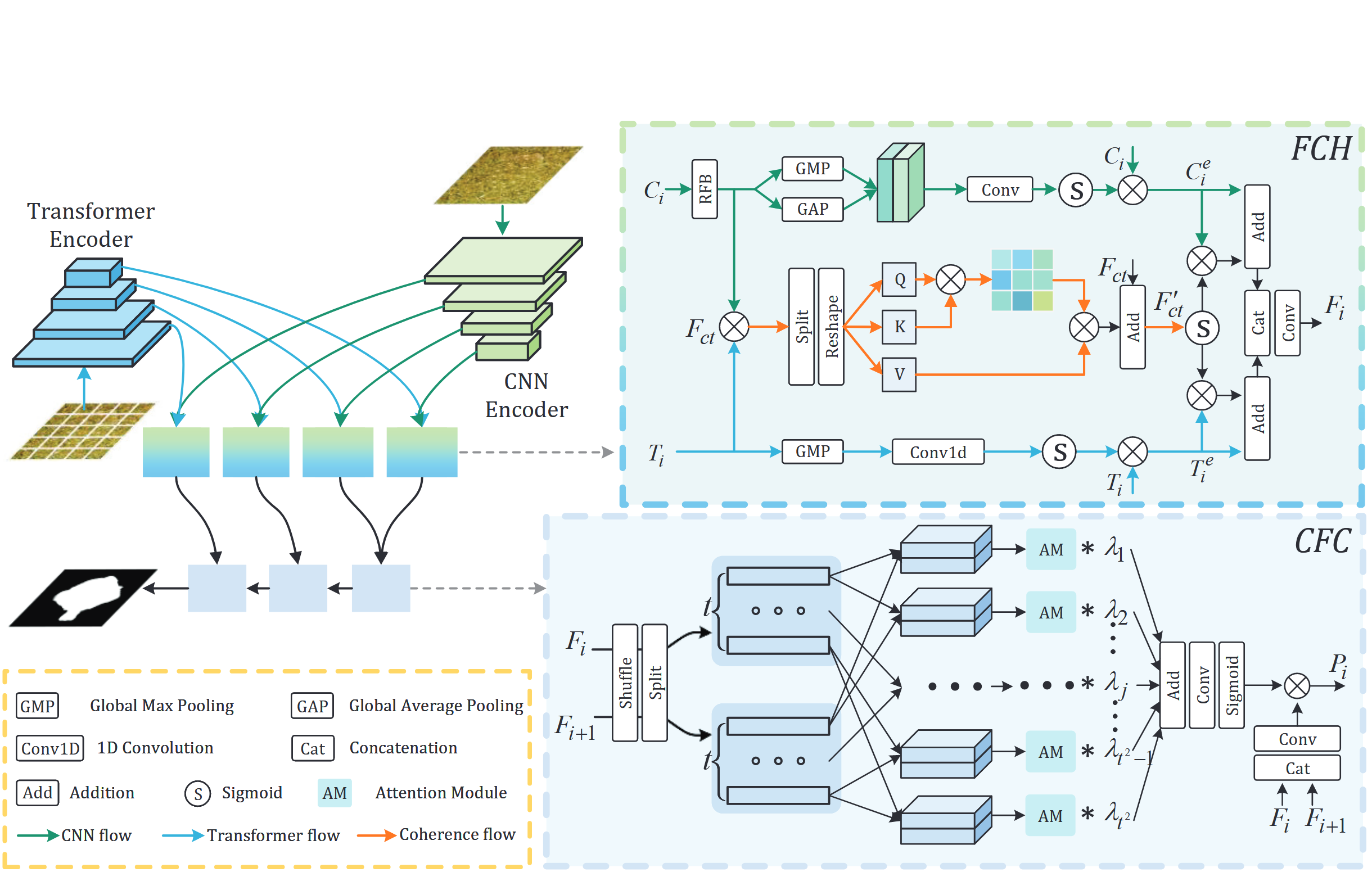

Camouflaged Object Detection with CNN-Transformer Harmonization and Calibration

Yilin Zhao, Qing Zhang*, Yuetong Li

International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2025

[ CCF-B ]

[PDF]

Camouflaged Object Detection via Frequency-aware Localization Perception and Boundary-aware Detail Enhancement

Chenxi Zhang, Qing Zhang*, Wei He*, Jiayun Wu, Chenyu Zhuang

IEEE Transactions on Consumer Electronics (TCE), 2025

[ CAS Zone 2 ]

FLRNet: A Bio-inspired Three-stage Network for Camouflaged Object Detection via Filtering, Localization and Refinement

Yilin Zhao, Qing Zhang*, Yuetong Li

Neurocomputing (NEURO), 2025, 626: 129523

[ CAS Zone 2 ]

[PDF]

HRPVT: High-Resolution Pyramid Vision Transformer for Medium and Small-scale Human Pose Estimation

Zhoujie Xu, Meng Dai*, Qing Zhang, Xiaodi Jiang

Neurocomputing (NEURO), 2025, 619(28): 129154

[ CAS Zone 2 ]

[PDF]

A Dual-stream Learning Framework for Weakly Supervised Salient Object Detection with Multi-strategy Integration

Yuyan Liu, Qing Zhang*, Yilin Zhao, Yanjiao Shi

The Visual Computer (TVC), 2025

[ CAS Zone 3 ]

[Code] [PDF]

FGNet: Feature Calibration and Guidance Refinement for Camouflaged Object Detection

Qiang Yu, Qing Zhang*, Yanjiao Shi, Qiangqiang Zhou

International Joint Conference on Neural Networks (IJCNN), 2025

[ CCF-C ]

[Code]

HEFNet: Hierarchical Unimodal Enhancement and Multi-modal Fusion for RGB-T Salient Object Detection

Jiayun Wu, Qing Zhang*, Chenxi Zhang, Yanjiao Shi, Qiangqiang Zhou

International Joint Conference on Neural Networks (IJCNN), 2025

[ CCF-C ]

[Code]

Dual-domain Collaboration Learning Network for Camouflaged Object Detection

Jingming Wang, Qing Zhang*, Xinyi Zhao, Yanjiao Shi, Qiangqiang Zhou

International Joint Conference on Neural Networks (IJCNN), 2025

[ CCF-C ]

[Code]

Direction-Oriented Edge Reconstruction Network for Camouflaged Object Detection

Xiaoxu Yang, Qing Zhang*, Qiangqiang Zhou

International Joint Conference on Neural Networks (IJCNN), 2025

[ CCF-C ]

[Code]

Boundary-and-Object Collaborative Learning Network for Camouflaged Object Detection

Chenyu Zhuang, Qing Zhang*, Chenxi Zhang, Xinxin Yuan

Image and Vision Computing (IMAVIS), 2025, 161: 105596

[ CAS Zone 3 ]

[Code] [PDF]

FINet: Detecting Camouflaged Objects Using Frequency-aware Information

Chengfeng Zhu, Zeming Liu, Qing Zhang*

The Chinese Conference on Pattern Recognition and Computer Vision (PRCV), 2025

[ CCF-C ]

SAM2-LPNet: Saliency Guided and Laplacian Aware Fine-tuning of SAM2 for Weakly Supervised Salient Object Detection

The Chinese Conference on Pattern Recognition and Computer Vision (PRCV), 2025

[ CCF-C ]

Collaborative Perception and Dual-stage Decoder Network for Camouflaged Object Detection

The Chinese Conference on Pattern Recognition and Computer Vision (PRCV), 2025

[ CCF-C ]

Intra- and Inter-Group Mutual Learning Network for Lightweight Camouflaged Object Detection

Chenyu Zhuang, Qing Zhang*

International Conference on Intelligent Computing (ICIC), 2025: 164-176

[ CCF-C ]

[PDF]

Rethinking Lightweight and Efficient Human Pose Estimation with Star Operation Reconstruction

Zhoujie Xu, Meng Dai*, Qing Zhang, Huawen Liu

International Conference on Knowledge Science, Engineering and Management (KSEM), 2025

[ CCF-C ]

A Camouflaged Object Detection Network with Global Cross-space Perception and Flexible Local Feature Refinement Network

Zhenjie Ji, Yanjiao Shi*, Qing Zhang, Qiangqiang Zhou

International Conference on Artificial Neural Networks (ICANN), 2025

[ CCF-C ]

2024

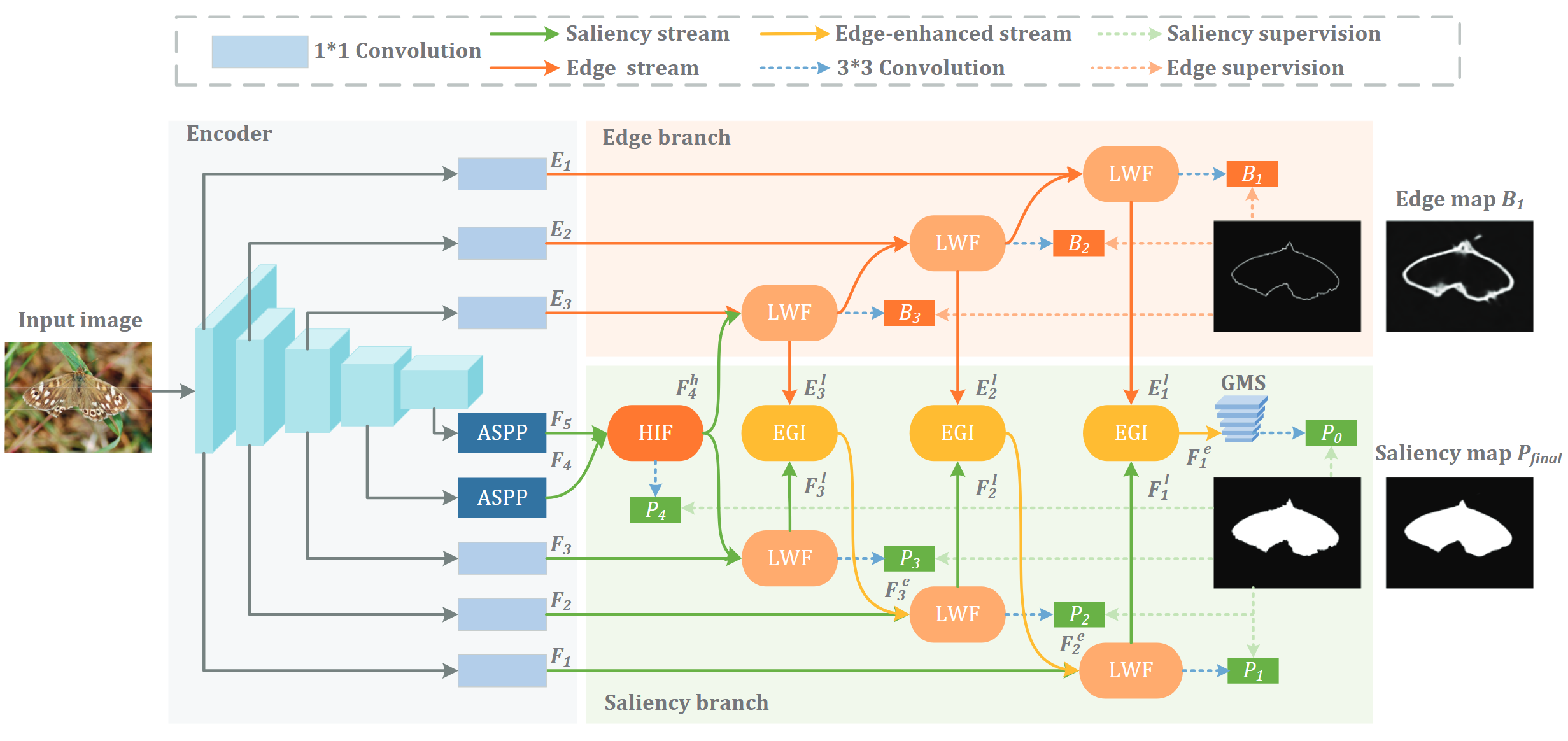

Salient Object Detection with Edge-guided Learning and Specific Aggregation

Liqian Zhang, Qing Zhang*

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2024, 34(1): 534-548

[ CAS Zone 1, Top ]

[Code] [PDF]

Bi-directional Boundary-object Interaction and Refinement Network for Camouflaged Object Detection

Jicheng Yang, Qing Zhang*, Yilin Zhao, Yuetong Li, Zeming Liu

IEEE International Conference on Multimedia and Expo (ICME), 2024

[ CCF-B ]

[Code] [PDF]

Detecting Camouflaged Object via Cross-level Context Supplement

Qing Zhang*, Weiqi Yan, Rui Zhao, Yanjiao Shi

Applied Intelligence, 2024

[ CAS Zone 3 ]

[Code] [PDF]

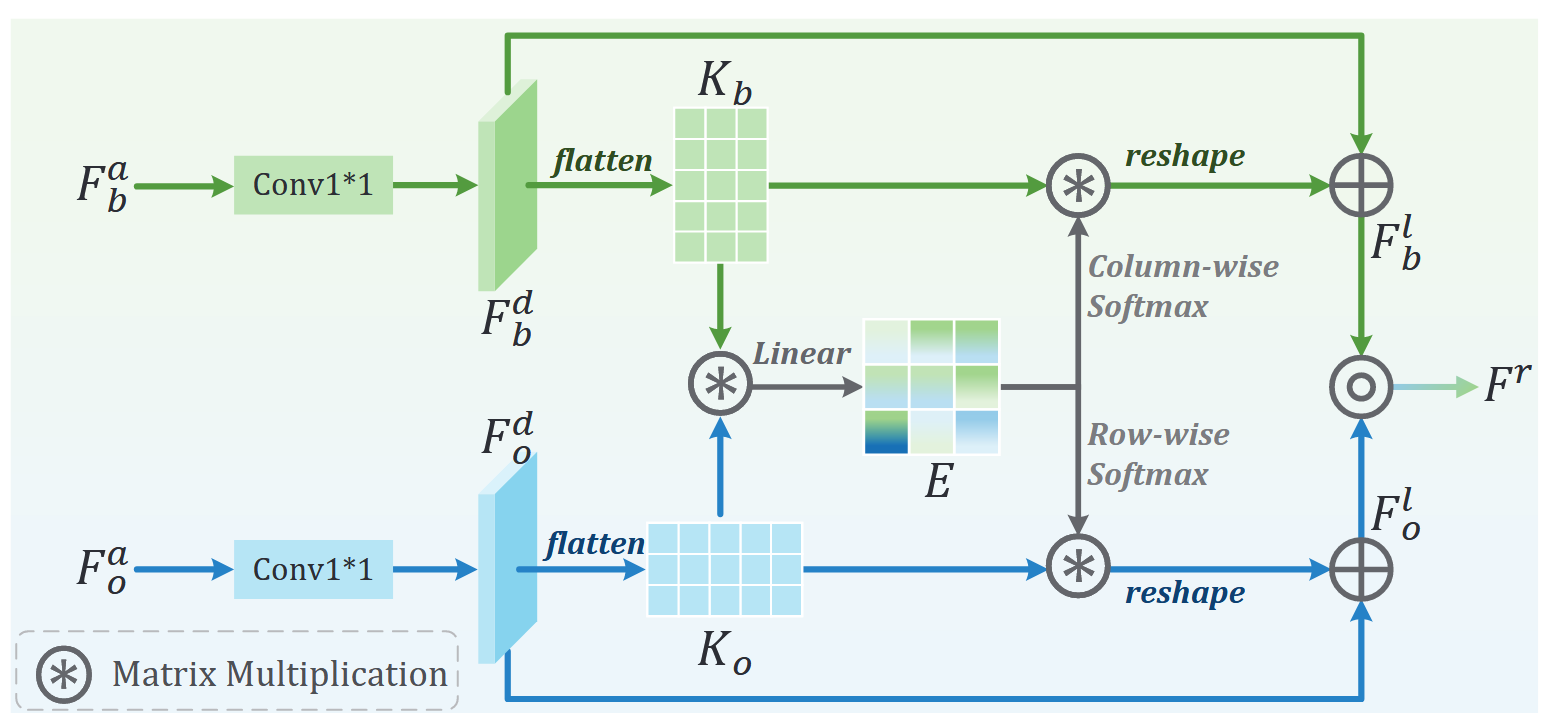

BMFNet: Bifurcated Multi-Modal Fusion Network for RGB-D Salient Object Detection

Chenwang Sun, Qing Zhang*, Chenyu Zhuang, Mingqian Zhang

Image and Vision Computing (IMAVIS), 2024, 147: 105048

[ CAS Zone 3 ]

[Code] [PDF]

Frequency Learning Network with Dual-Guidance Calibration for Camouflaged Object Detection

Yilin Zhao, Qing Zhang*, Yuetong Li

Asian Conference on Computer Vision (ACCV), 2024

[ CCF-C ]

[Code] [PDF]

Transformer-based Depth Optimization Network for RGB-D Salient Object Detection

Lu Li, Yanjiao Shi*, Jingyu Yang, Qiangqiang Zhou, Qing Zhang, Liu Cui

International Conference on Pattern Recognition (ICPR), 2024

[ CCF-C ]

[PDF]

AFINet: Camouflaged object detection via attention fusion and interaction network

Qing Zhang, Weiqi Yan, Yilin Zhao, Qi Jin, Yu Zhang*

Journal of Visual Communication and Image Representation (JVCIR), 2024

[ CAS Zone 4 ]

[Code] [PDF]

Boosting transferability of adversarial samples via saliency distribution and frequency domain enhancement

Yixuan Wang, Wei Hong, Xueqin Zhang*, Qing Zhang, Chunhua Gu

Knowledge-Based Systems (KBS), 2024, 300: 112152

[ CAS Zone 1, Top ]

[PDF]

Light-sensitive and adaptive fusion network for RGB-T crowd counting

Liangjun Huang*, Wencan Kang, Guangkai Chen, Qing Zhang, Jianwei Zhang

The Visual Computer (TVC), 2024, 40(10): 7279-7292

[ CAS Zone 3 ]

[PDF]

Multi-branch feature fusion and refinement network for salient object detection

Jinyu Yang, Yanjiao Shi*, Jin Zhang, Qianqian Guo, Qing Zhang, Liu Cui

Multimedia Systems, 2024, 30(4): 190

[ CAS Zone 4 ]

[PDF]

D2Net: discriminative feature extraction and details preservation network for salient object detection

Qianqian Guo, Yanjiao Shi*, Jin Zhang, Jinyu Yang, Qing Zhang

Journal of Electronic Imaging (JEI), 2024, 33(4): 043047

[ CAS Zone 4 ]

[PDF]

2023

CFANet: A Cross-layer Feature Aggregation Network for Camouflaged Object Detection

Qing Zhang*, Weiqi Yan

IEEE International Conference on Multimedia and Expo (ICME), 2023: 2441-2446

[ CCF-B ]

[PDF] [Code]

TCRNet: a trifurcated cascaded refinement network for salient object detection

Qing Zhang*, Rui Zhao, Liqian Zhang

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2023, 33(1): 298-311

[ CAS Zone 1, Top ]

[PDF] [Code]

Polyp-Mixer: An Efficient Context-Aware MLP-based Paradigm for Polyp Segmentation

Jinghui Shi, Qing Zhang*, Yuhao Tang, Zhongqun Zhang

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2023, 33(1): 30-42

[ CAS Zone 1, Top ]

[PDF]

Depth cue enhancement and guidance network for RGB-D salient object detection

Xiang Li, Qing Zhang*, Weiqi Yan, Meng Dai

Journal of Visual Communication and Image Representation (JVCIR), 2023, 95: 103880

[ CAS Zone 4 ]

[PDF] [Code]

Motion Analysis and Reconstruction of Human Joint Regions for Sparse RGBD Images

Tianzhen Dong, Yuntao Bai, Qing Zhang, Yi Zhang

IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 2023: 773-774

[ CCF-A ]

[PDF]

SC2Net: Scale-aware Crowd Counting Network with Pyramid Dilated Convolution

Lanjun Liang*, Huailin Zhao, Fangbo Zhou, Qing Zhang, Zhili Song, Qingxuan Shi

Applied Intelligence, 2023, 53(5): 5146-5159

[ CAS Zone 3 ]

2022

Progressive dual-attention residual network for salient object detection

Liqian Zhang, Qing Zhang*, Rui Zhao

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), 2022, 32(9): 5902-5915

[ CAS Zone 1, Top ]

[PDF] [Code]

Residual attentive feature learning network for salient object detection

Qing Zhang*, Yanjiao Shi, Xueqin Zhang, Liqian Zhang

Neurocomputing (NEURO), 2022, 51: 741-752

[ CAS Zone 2 ]

[PDF]

Cross-modal and multi-level feature refinement network for RGB-D salient object detection

Yue Gao, Meng Dai*, Qing Zhang

The Visual Computer (TVC), 2022, 39: 3979-3994

[ CAS Zone 3 ]

[PDF]

COMAL: compositional multi-scale feature enhanced learning for crowd counting

Fangbo Zhou, Huailin Zhao*, Yani Zhang, Qing Zhang, Lanjun Liang, Yaoyao Li, Zuodong Duan

Multimedia Tools and Applications, 2022, 81(15): 20541-20560

[ CAS Zone 4 ]

[PDF]

R2Net: Residual refinement network for salient object detection

Jin Zhang, Qiuwei Liang, Qianqian Guo, Jinyu Yang, Qing Zhang, Yanjiao Shi*

Image and Vision Computing (IMAVIS), 2022, 120: 104423

[ CAS Zone 3 ]

[PDF]

Attention guided contextual feature fusion network for salient object detection

Jin Zhang, Yanjiao Shi*, Qing Zhang, Liu Cui, Ying Chen, Yugen Yi

Image and Vision Computing (IMAVIS), 2022, 117: 104337

[ CAS Zone 3 ]

[PDF]

2021

Global and local information aggregation network for edge-aware salient object detection

Qing Zhang*, Liqian Zhang, Dong Wang, Yanjiao Shi, Jiajun Lin

Journal of Visual Communication and Image Representation (JVCIR), 2021, 81: 103350

[ CAS Zone 4 ]

[PDF]

Deep saliency detection via spatial-wise dilated convolutional attention

Wenzhao Cui, Qing Zhang*, Baochuan Zuo

Neurocomputing, 2021, 445: 35-49

[ CAS Zone 2 ]

[PDF]

Edge-aware salient object detection network via context guidance

Xiaowei Chen, Qing Zhang*, Liqian Zhang

Image and Vision Computing (IMAVIS), 2021, 110: 104166

[ CAS Zone 3 ]

[PDF]

Salient object detection network with multi-scale feature refinement and boundary feedback

Qing Zhang*, Xiang Li

Image and Vision Computing (IMAVIS), 2021, 116: 104326

[ CAS Zone 3 ]

[PDF]

2020

Attention and boundary guided salient object detection

Qing Zhang*, Yanjiao Shi, Xueqin Zhang

Pattern Recognition (PR), 2020, 107: 107484

[PDF]

Attentive feature integration network for detecting salient objects in images

Qing Zhang*, Wenzhao Cui, Yanjiao Shi, Xueqin Zhang

Neurocomputing, 2020, 411: 268-281

[PDF]

2019 and Before

Multi-level and multi-scale deep saliency network for salient object detection

Qing Zhang*, Jiajun Lin, Jingjing Zhuge, Wenhao Yuan

Journal of Visual Communication and Image Representation (JVCIR), 2019, 59: 415-424

Kernel null-space-based abnormal event detection using hybrid motion information

Yanjiao Shi, Yugen Yi, Qing Zhang*, Jiangyan Dai

Journal of Electronic Imaging (JEI), 2019, 28(2): 021011

Hierarchical Salient Object Detection Network with Dense Connections

Qing Zhang*, Jianchen Shi, Baochuan Zuo, Meng Dai, Tianzhen Dong, Xiao Qi

International Conference on Image and Graphics (ICIG), 2019: 454-466

Salient object detection via compactness and objectness cues

Qing Zhang*, Jiajun Lin, Wenju Li, Yanjiao Shi, Guogang Cao

The Visual Computer (TVC), 2018, 34(4): 473-489

Salient object detection via color and texture cues

Qing Zhang*, Jiajun Lin, Yanyun Tao, Wenju Li, Yanjiao Shi

Neurocomputing (NEURO), 2017, 3(21): 35-48

Two-stage absorbing markov chain for salient object detection

Qing Zhang*, Desi Luo, Wenju Li, Yanjiao Shi, Jiajun Lin

International Conference on Image Processing (ICIP), 2017: 895-899

A systematic EHW approach to the evolutionary design of sequential circuits

Yanyun Tao*, Qing Zhang, Lijun Zhang, Yuzhen Zhang

Soft Computing, 2013, 20(12): 5025-5038

Salient Object Detection via Structure Extraction and Region Contrast

Qing Zhang*, Jiajun Lin, Xiaodan Li

Journal of Information Science and Engineering (JISE), 2016, 32(6): 1435-1454

Saliency-based abnormal event detection in crowded scenes

Yanjiao Shi*, Yunxiang Liu, Qing Zhang, Yugen Yi, Wenju Li

Journal of Electronic Imaging (JEI), 2016, 25(6): 61608

Structure extraction and region contrast based salient object detection

Qing Zhang*, Jiajun Lin, Zhigang Xie

International Conference on Digital Image Processing (ICDIP), 2016

Exemplar-based image inpainting using color distribution analysis

Qing Zhang*, Jiajun Lin

Journal of Information Science and Engineering (JISE), 2012, 28(4): 641-654

Image inpainting via variation of variances and linear weighted filling-in

Qing Zhang*, Jiajun Lin

Optical Engineering, 2011, 50(7): 077001

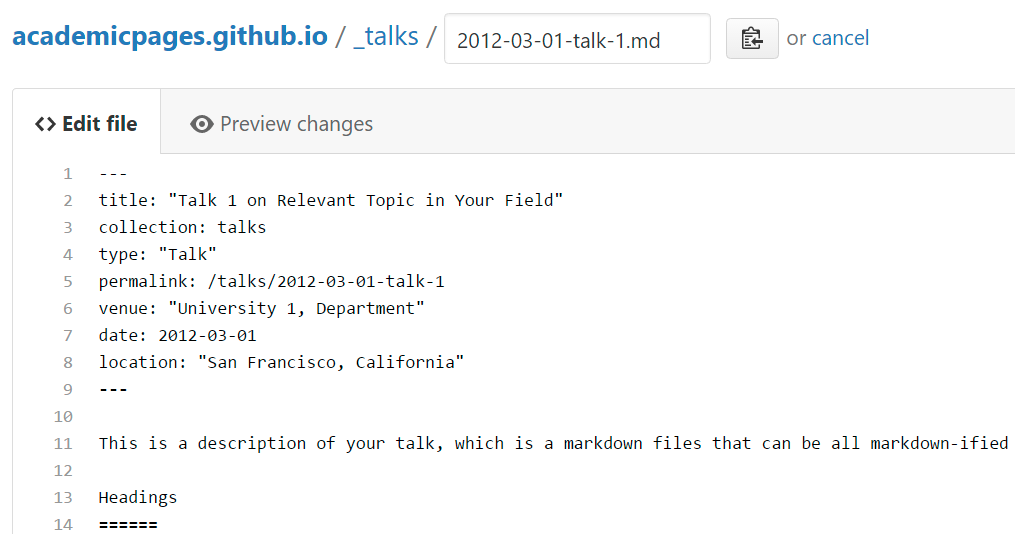

A data-driven personal website ====== Like many other Jekyll-based GitHub Pages templates, Academic Pages makes you separate the website's content from its form. The content & metadata of your website are in structured markdown files, while various other files constitute the theme, specifying how to transform that content & metadata into HTML pages. You keep these various markdown (.md), YAML (.yml), HTML, and CSS files in a public GitHub repository. Each time you commit and push an update to the repository, the [GitHub pages](https://pages.github.com/) service creates static HTML pages based on these files, which are hosted on GitHub's servers free of charge. Many of the features of dynamic content management systems (like Wordpress) can be achieved in this fashion, using a fraction of the computational resources and with far less vulnerability to hacking and DDoSing. You can also modify the theme to your heart's content without touching the content of your site. If you get to a point where you've broken something in Jekyll/HTML/CSS beyond repair, your markdown files describing your talks, publications, etc. are safe. You can rollback the changes or even delete the repository and start over - just be sure to save the markdown files! Finally, you can also write scripts that process the structured data on the site, such as [this one](https://github.com/academicpages/academicpages.github.io/blob/master/talkmap.ipynb) that analyzes metadata in pages about talks to display [a map of every location you've given a talk](https://academicpages.github.io/talkmap.html). Getting started ====== 1. Register a GitHub account if you don't have one and confirm your e-mail (required!) 2. Fork [this template](https://github.com/academicpages/academicpages.github.io) by clicking the "Use this template" button in the top right. 3. Go to the repository's settings (rightmost item in the tabs that start with "Code", should be below "Unwatch"). 
Rename the repository "[your GitHub username].github.io", which will also be your website's URL. 4. Set site-wide configuration and create content & metadata (see below -- also see [this set of diffs](http://archive.is/3TPas) showing what files were changed to set up [an example site](https://getorg-testacct.github.io) for a user with the username "getorg-testacct") 5. Upload any files (like PDFs, .zip files, etc.) to the files/ directory. They will appear at https://[your GitHub username].github.io/files/example.pdf. 6. Check status by going to the repository settings, in the "GitHub pages" section Site-wide configuration ------ The main configuration file for the site is in the base directory in [_config.yml](https://github.com/academicpages/academicpages.github.io/blob/master/_config.yml), which defines the content in the sidebars and other site-wide features. You will need to replace the default variables with ones about yourself and your site's github repository. The configuration file for the top menu is in [_data/navigation.yml](https://github.com/academicpages/academicpages.github.io/blob/master/_data/navigation.yml). For example, if you don't have a portfolio or blog posts, you can remove those items from that navigation.yml file to remove them from the header. Create content & metadata ------ For site content, there is one markdown file for each type of content, which are stored in directories like _publications, _talks, _posts, _teaching, or _pages. For example, each talk is a markdown file in the [_talks directory](https://github.com/academicpages/academicpages.github.io/tree/master/_talks). At the top of each markdown file is structured data in YAML about the talk, which the theme will parse to do lots of cool stuff. 
The same structured data about a talk is used to generate the list of talks on the [Talks page](https://academicpages.github.io/talks), each [individual page](https://academicpages.github.io/talks/2012-03-01-talk-1) for specific talks, the talks section for the [CV page](https://academicpages.github.io/cv), and the [map of places you've given a talk](https://academicpages.github.io/talkmap.html) (if you run this [python file](https://github.com/academicpages/academicpages.github.io/blob/master/talkmap.py) or [Jupyter notebook](https://github.com/academicpages/academicpages.github.io/blob/master/talkmap.ipynb), which creates the HTML for the map based on the contents of the _talks directory). **Markdown generator** The repository includes [a set of Jupyter notebooks](https://github.com/academicpages/academicpages.github.io/tree/master/markdown_generator ) that converts a CSV containing structured data about talks or presentations into individual markdown files that will be properly formatted for the Academic Pages template. The sample CSVs in that directory are the ones I used to create my own personal website at stuartgeiger.com. My usual workflow is that I keep a spreadsheet of my publications and talks, then run the code in these notebooks to generate the markdown files, then commit and push them to the GitHub repository. How to edit your site's GitHub repository ------ Many people use a git client to create files on their local computer and then push them to GitHub's servers. If you are not familiar with git, you can directly edit these configuration and markdown files directly in the github.com interface. Navigate to a file (like [this one](https://github.com/academicpages/academicpages.github.io/blob/master/_talks/2012-03-01-talk-1.md) and click the pencil icon in the top right of the content preview (to the right of the "Raw | Blame | History" buttons). You can delete a file by clicking the trashcan icon to the right of the pencil icon. 
You can also create new files or upload files by navigating to a directory and clicking the "Create new file" or "Upload files" buttons. Example: editing a markdown file for a talk  For more info ------ More info about configuring Academic Pages can be found in [the guide](https://academicpages.github.io/markdown/), the [growing wiki](https://github.com/academicpages/academicpages.github.io/wiki), and you can always [ask a question on GitHub](https://github.com/academicpages/academicpages.github.io/discussions). The [guides for the Minimal Mistakes theme](https://mmistakes.github.io/minimal-mistakes/docs/configuration/) (which this theme was forked from) might also be helpful.